We are committed to computer vision research in 3D artificial intelligence, working on 3D hand gesture recognition, face recognition, human skeleton recognition and 3D reconstruction using point clouds and machine learning algorithms. We also cooperate with many enterprises on R&D projects that have both scientific and practical value. We aim to build a top artificial intelligence research team.

Hand Gesture Recognition

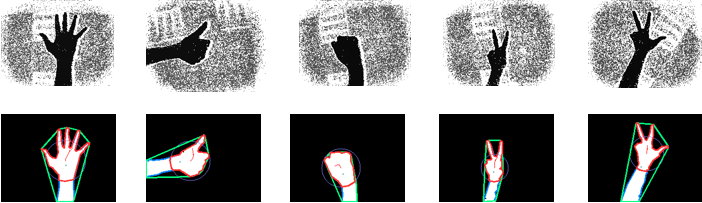

Depth cameras such as Kinect and RealSense are largely insensitive to lighting, texture, color and similar factors. Compared with a traditional RGB camera, a depth camera can cope with more complex environments and provides additional features, since every pixel carries a distance. We use a TOF depth camera to collect image data, and we developed an SDK for data labeling that can quickly record and play back data from a 3D TOF depth camera.
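Because each pixel of a depth frame stores a distance, a frame can be back-projected into a 3D point cloud with the pinhole camera model. The block below is a minimal illustration, not our SDK: the function name, the millimetre depth unit and the intrinsics `fx`, `fy`, `cx`, `cy` are assumptions that in practice come from the camera's calibration.

```python
import numpy as np

def depth_to_point_cloud(depth, fx, fy, cx, cy, depth_scale=0.001):
    """Back-project an H x W depth image into an N x 3 point cloud in metres.

    fx, fy, cx, cy: pinhole intrinsics (assumed known from calibration).
    depth_scale: converts raw depth values to metres (0.001 assumes millimetres).
    Pixels with depth 0 are treated as invalid and dropped.
    """
    depth = np.asarray(depth, dtype=np.float64)
    h, w = depth.shape
    # u = column index, v = row index of every pixel
    u, v = np.meshgrid(np.arange(w), np.arange(h))
    z = depth * depth_scale
    valid = z > 0
    # Pinhole model: u = fx * X / Z + cx  =>  X = (u - cx) * Z / fx (same for Y)
    x = (u[valid] - cx) * z[valid] / fx
    y = (v[valid] - cy) * z[valid] / fy
    return np.stack([x, y, z[valid]], axis=1)
```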

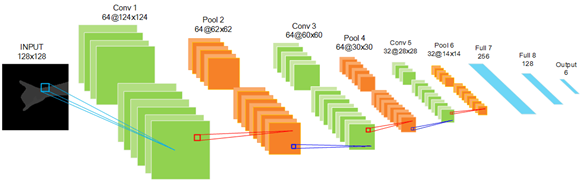

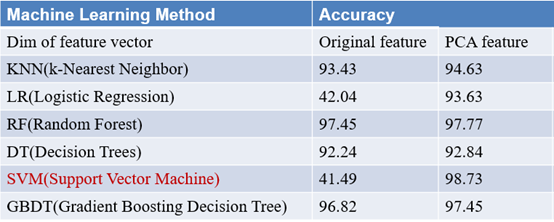

After foreground/background segmentation and related preprocessing, we extract hand features and train SVM and CNN models on our own depth datasets. The accuracy of static gesture recognition exceeds 98%. We compared several traditional machine learning classification methods and, based on the accuracy results, chose the SVM algorithm. We also designed a convolutional neural network that performs better for hand pose recognition. The CNN architecture is shown in the figure below.

Architecture of the hand pose classification convolutional neural network.

Comparison of several traditional machine learning classification methods.

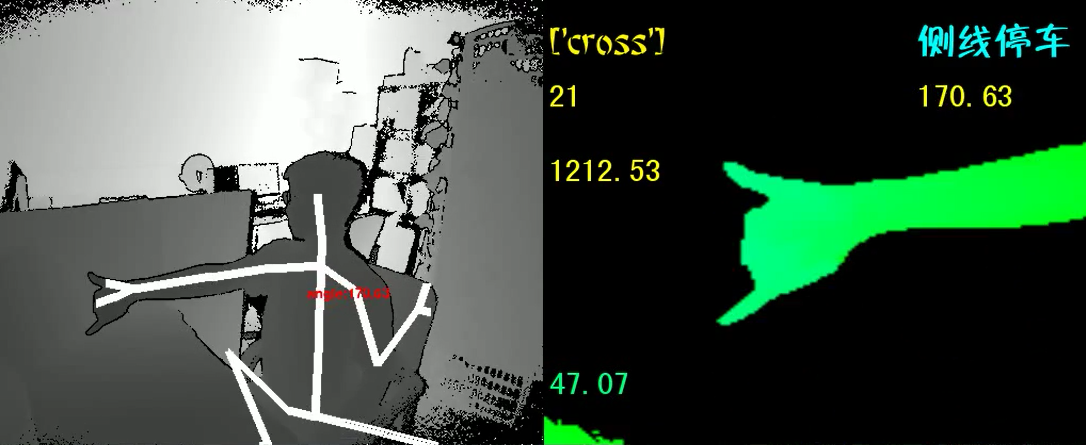
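The foreground/background segmentation step can be illustrated with a simple depth threshold. This is a minimal sketch assuming the hand is the object closest to the camera; the function `segment_nearest_object` and the `band`, `min_depth` and `max_depth` values (in millimetres) are hypothetical, not our exact preprocessing.

```python
import numpy as np

def segment_nearest_object(depth, band=150, min_depth=1, max_depth=4000):
    """Boolean mask of pixels that belong to the object nearest the camera.

    Assumes the hand is the closest object (e.g. raised toward the camera).
    depth: H x W array in millimetres; values outside [min_depth, max_depth]
    (including 0, i.e. no reading) are treated as invalid.
    band: how far (mm) behind the nearest valid point a pixel may lie and
    still be counted as part of the hand.
    """
    depth = np.asarray(depth, dtype=np.float64)
    valid = (depth >= min_depth) & (depth <= max_depth)
    if not valid.any():
        return np.zeros(depth.shape, dtype=bool)
    nearest = depth[valid].min()
    return valid & (depth <= nearest + band)
```

The resulting mask can then be used to crop the hand region before feature extraction.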

In addition, dynamic hand gesture sequences can also be recognized reliably through our model matching algorithm. So far, we have verified that our hand gesture recognition algorithm performs well in laboratory, office, train and car environments.
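Our model matching algorithm is not detailed here. As one possible stand-in, dynamic time warping (DTW) compares a recorded gesture trajectory with stored templates while tolerating differences in speed. The sketch below is illustrative only; `dtw_distance`, `classify_sequence` and the template labels are hypothetical.

```python
import math

def dtw_distance(seq_a, seq_b):
    """Dynamic time warping distance between two sequences of feature vectors."""
    n, m = len(seq_a), len(seq_b)
    cost = [[math.inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = math.dist(seq_a[i - 1], seq_b[j - 1])  # Euclidean distance
            # Extend the cheapest of: repeat a point of b, repeat a point of a, or advance both.
            cost[i][j] = d + min(cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])
    return cost[n][m]

def classify_sequence(sequence, templates):
    """Return the label of the template with the smallest DTW distance."""
    return min(templates, key=lambda label: dtw_distance(sequence, templates[label]))
```

Because DTW lets one sequence linger on a point while the other advances, a slow and a fast swipe along the same path can match with zero cost.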

Real-time dynamic hand gesture recognition for train drivers.

Here are the static and dynamic hand gesture recognition demos. The predefined hand gestures can be accurately recognized.

DEMOs:

Real-time static hand pose recognition for smart interaction in car:

Real-time dynamic hand gesture recognition for train drivers:

Hand gesture record and labeling SDK:

Fingertip Recognition

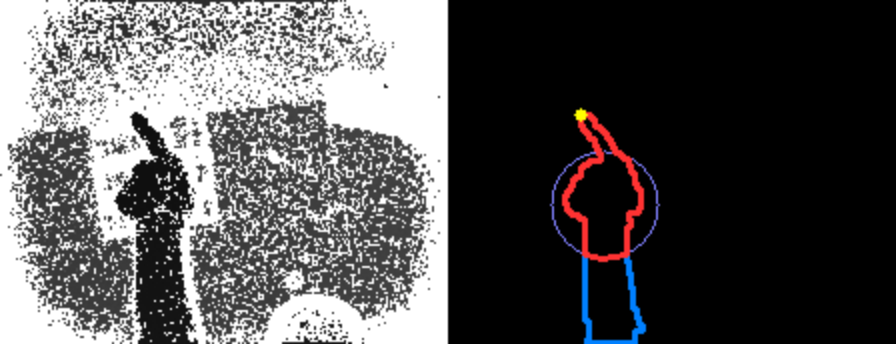

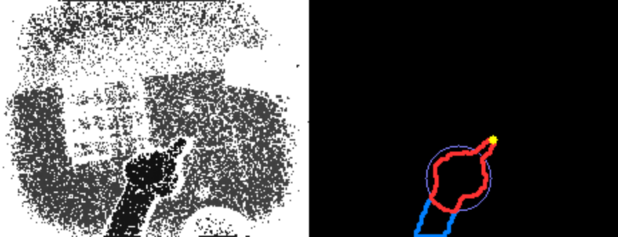

We preprocess the image sequence from the depth camera to segment the hand and locate the palm center. We then use two methods to detect the fingertip position in each frame: one based on 2D contour information and the other based on 3D depth information. Considering both results, we choose the more valid position as the final position. When neither method is reliable, we use the position estimated by a Kalman filter. On the basis of fingertip detection and tracking, we define several simple actions and recognize them.
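The two fingertip candidates described above can be sketched as follows, assuming a boolean hand mask and a depth image in millimetres. The 2D candidate is the hand pixel farthest from the palm center (such a pixel always lies on the hand contour), and the 3D candidate is the hand pixel nearest the camera. Using the mask centroid as the palm center is a simplification, all function names are hypothetical, and the rule for choosing the more valid candidate is left out because it is not specified here.

```python
import numpy as np

def palm_center(mask):
    """Approximate palm center as the centroid (row, col) of the hand mask.

    Simplification: a maximum of the distance transform is a common, more
    robust alternative because extended fingers pull the centroid toward them.
    """
    rows, cols = np.nonzero(mask)
    return float(rows.mean()), float(cols.mean())

def fingertip_2d(mask, center):
    """2D candidate: the hand pixel farthest from the palm center."""
    rows, cols = np.nonzero(mask)
    d2 = (rows - center[0]) ** 2 + (cols - center[1]) ** 2
    k = int(np.argmax(d2))
    return int(rows[k]), int(cols[k])

def fingertip_3d(mask, depth):
    """3D candidate: the hand pixel closest to the camera (assumes a pointing finger)."""
    masked = np.where(mask, depth, np.inf)  # ignore background pixels
    r, c = np.unravel_index(int(np.argmin(masked)), masked.shape)
    return int(r), int(c)
```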

Fingertip detection and tracking.
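For the Kalman filter fallback, a constant-velocity model over the state [x, y, vx, vy] is a common choice. In this minimal sketch the class name and the noise parameters `q`, `r` and `p0` are assumptions, not tuned values. Each frame calls `step`; passing `None` when neither detector is reliable returns the predicted position instead of a corrected one.

```python
import numpy as np

class FingertipKalman:
    """Constant-velocity Kalman filter for a 2D fingertip position.

    State s = [x, y, vx, vy]; q, r and p0 are illustrative assumptions.
    """

    def __init__(self, x, y, dt=1.0, q=1e-2, r=1.0, p0=1e3):
        self.s = np.array([x, y, 0.0, 0.0], dtype=float)
        self.P = p0 * np.eye(4)  # large initial uncertainty: early measurements dominate
        self.F = np.array([[1.0, 0.0, dt, 0.0],
                           [0.0, 1.0, 0.0, dt],
                           [0.0, 0.0, 1.0, 0.0],
                           [0.0, 0.0, 0.0, 1.0]])
        self.H = np.array([[1.0, 0.0, 0.0, 0.0],
                           [0.0, 1.0, 0.0, 0.0]])  # only position is measured
        self.Q = q * np.eye(4)  # process noise
        self.R = r * np.eye(2)  # measurement noise

    def step(self, measurement=None):
        """Predict one frame ahead, then correct with `measurement` if given."""
        self.s = self.F @ self.s
        self.P = self.F @ self.P @ self.F.T + self.Q
        if measurement is not None:
            z = np.asarray(measurement, dtype=float)
            innovation = z - self.H @ self.s
            S = self.H @ self.P @ self.H.T + self.R
            K = self.P @ self.H.T @ np.linalg.inv(S)  # Kalman gain
            self.s = self.s + K @ innovation
            self.P = (np.eye(4) - K @ self.H) @ self.P
        return float(self.s[0]), float(self.s[1])
```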

Here is the fingertip recognition demo. We can identify different fingertip actions, such as drawing a circle, a line, a top-to-bottom or left-to-right stroke, a “V” and so on.
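Simple actions such as circles and left-to-right or top-to-bottom strokes can be told apart from the tracked fingertip trajectory with a few geometric rules. The sketch below is a hypothetical rule set, not our recognizer: a path whose endpoints are close relative to its length counts as a circle, otherwise the dominant displacement axis decides the direction. The `closed_ratio` threshold is an assumption, and shapes such as “V” would need additional features.

```python
import math

def classify_stroke(points, closed_ratio=0.2):
    """Label a fingertip trajectory (list of (x, y) image points).

    Returns 'circle', 'left_to_right', 'right_to_left', 'up_to_down',
    'down_to_up', or 'none' for a trajectory that does not move.
    Image coordinates are assumed: x grows to the right, y grows downward.
    """
    path_len = sum(math.dist(p, q) for p, q in zip(points, points[1:]))
    if path_len == 0:
        return "none"
    dx = points[-1][0] - points[0][0]
    dy = points[-1][1] - points[0][1]
    # A path that ends close to where it started, relative to its length, is a circle.
    if math.hypot(dx, dy) < closed_ratio * path_len:
        return "circle"
    if abs(dx) >= abs(dy):
        return "left_to_right" if dx > 0 else "right_to_left"
    return "up_to_down" if dy > 0 else "down_to_up"
```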

DEMOs:

Fingertip Recognition:

3D Reconstruction

We use a depth camera, for example Kinect for Windows. Our reconstruction currently targets a single object. We implement it in two ways: in the first, the object is fixed on a stable turntable and a fixed camera captures it as it rotates; in the second, the object stays fixed and a handheld camera collects data while the operator walks around it.
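In the turntable setup the rotation of each capture is known, so the scans can be merged by undoing that rotation about the turntable axis. This minimal sketch assumes a calibrated axis parallel to the camera's y axis passing through `axis_point`, and known per-scan angles; both are hypothetical inputs, and real data may still need refinement (for example with ICP).

```python
import numpy as np

def rotation_about_y(theta):
    """3 x 3 rotation matrix about the y axis by `theta` radians."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, 0.0, s],
                     [0.0, 1.0, 0.0],
                     [-s, 0.0, c]])

def merge_turntable_scans(scans, angles, axis_point):
    """Merge turntable point clouds into the frame of the first scan.

    scans: list of N_i x 3 arrays in camera coordinates.
    angles: table rotation (radians) of each scan relative to the first; a
    positive angle means the object's points moved by rotation_about_y(angle)
    about the axis through `axis_point` (assumed parallel to the camera y axis).
    """
    c = np.asarray(axis_point, dtype=float)
    merged = []
    for pts, theta in zip(scans, angles):
        # Undo the table rotation: shift to the axis, rotate by -theta, shift back.
        R = rotation_about_y(-theta)
        merged.append((np.asarray(pts, dtype=float) - c) @ R.T + c)
    return np.vstack(merged)
```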

With the turntable, we reconstruct the object by registering the point clouds directly. With the handheld camera, we register the point clouds using ICP (Iterative Closest Point). In the future, we will apply deep learning to object reconstruction, with the goal of reconstructing a complete object from a single depth image.
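Point-to-point ICP alternates two steps: match each source point to its nearest target point, then solve for the rigid transform that best aligns the matched pairs (here with the SVD-based Kabsch method). This is a minimal NumPy sketch with brute-force matching, suitable only for small clouds; the function names, iteration count and tolerance are assumptions, not our implementation.

```python
import numpy as np

def best_rigid_transform(src, dst):
    """Least-squares R, t with R @ src[i] + t ≈ dst[i] (Kabsch / SVD method)."""
    src_c, dst_c = src.mean(axis=0), dst.mean(axis=0)
    H = (src - src_c).T @ (dst - dst_c)
    U, _, Vt = np.linalg.svd(H)
    # Flip the last axis if needed so R is a proper rotation (det = +1), not a reflection.
    d = np.sign(np.linalg.det(Vt.T @ U.T))
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = dst_c - R @ src_c
    return R, t

def nearest_neighbours(src, tgt):
    """Brute-force nearest target index per source point and the mean squared distance."""
    d2 = ((src[:, None, :] - tgt[None, :, :]) ** 2).sum(axis=2)
    nn = d2.argmin(axis=1)
    return nn, float(d2[np.arange(len(src)), nn].mean())

def icp(source, target, iterations=30, tol=1e-8):
    """Align `source` (N x 3) to `target` (M x 3).

    Returns the accumulated R, t and the final mean squared nearest-neighbour error.
    """
    src = np.asarray(source, dtype=float).copy()
    tgt = np.asarray(target, dtype=float)
    R_total, t_total = np.eye(3), np.zeros(3)
    prev_err = np.inf
    for _ in range(iterations):
        nn, err = nearest_neighbours(src, tgt)
        if prev_err - err < tol:  # stop once the error no longer improves
            break
        prev_err = err
        R, t = best_rigid_transform(src, tgt[nn])
        src = src @ R.T + t
        # Compose the new step with the transform accumulated so far.
        R_total, t_total = R @ R_total, R @ t_total + t
    _, err = nearest_neighbours(src, tgt)
    return R_total, t_total, err
```

ICP only converges to the correct alignment from a reasonable initial pose, which is one reason consecutive handheld frames, with small motion between them, suit it well.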

3D reconstruction has a very wide range of applications, such as industrial components and medical imaging. We want to apply our technique to e-commerce businesses that want to show their merchandise to customers.