The Navigation and Location Group focuses on building a robust system that can operate in an area that is known but not precisely mapped. The group is organized into two subgroups by research field. One works on mapping the area and locating the robot using several kinds of sensors, including cameras, IMUs, and lidar. The other programs the robot to move through the area safely, based on the map and location provided by the first subgroup. Our main sensor is the camera, so we focus on vision navigation and vision-based fusion location.

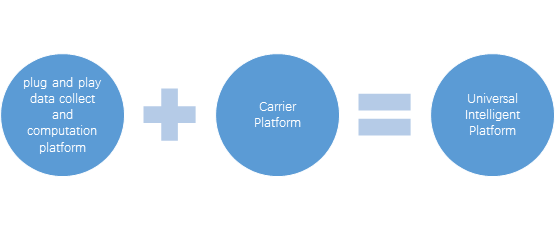

We want to build a universal intelligent platform that can be used on the ground or in the air. The structure of the platform is as follows:

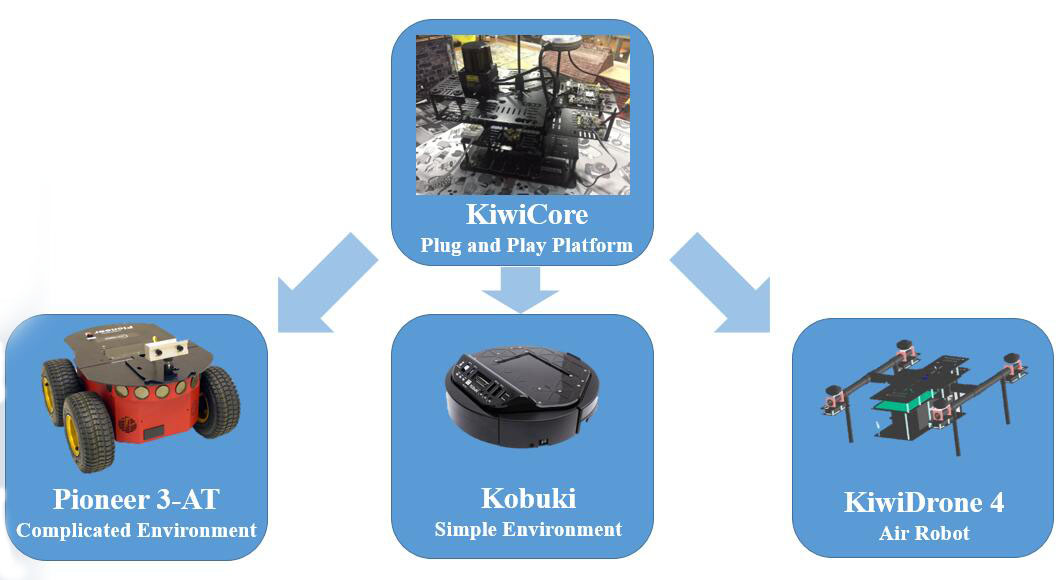

We mainly use three platforms to test our algorithms: KiwiDrone (a quadrotor built by the group), a hand-held platform (a simplified version of KiwiDrone), and a Pioneer 3-AT (a commercially purchased robot).

Research

Vision navigation

Vision navigation uses cameras or RGB-D cameras to sense the environment. By analyzing the spatial information, the robot obtains the obstacles, its relative position, and a path to follow. Finally, the robot navigates to the destination.
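As a rough illustration of the obstacle step, the sketch below scans a depth image (as an RGB-D camera might provide) for the closest valid reading and checks it against a safety distance. It is a minimal sketch, not the group's method: the function names `nearest_obstacle` and `is_path_clear`, the list-of-rows depth format in meters, the convention that non-positive readings mean missing depth, and the threshold values are all assumptions made for this example.

```python
def nearest_obstacle(depth_rows, max_range_m=10.0):
    """Return (distance_m, column) of the closest valid depth reading, or None.

    depth_rows is a hypothetical depth image: a list of rows, each a list of
    distances in meters.
    """
    best = None
    for row in depth_rows:
        for col, d in enumerate(row):
            # Assumption: zero or negative readings mean "no depth available".
            if 0.0 < d <= max_range_m and (best is None or d < best[0]):
                best = (d, col)
    return best


def is_path_clear(depth_rows, safe_distance_m=1.0):
    """True if no valid reading is closer than safe_distance_m (assumed value)."""
    hit = nearest_obstacle(depth_rows)
    return hit is None or hit[0] >= safe_distance_m


# Tiny made-up depth image: one reading at 0.8 m in column 1.
depth = [
    [3.2, 3.1, 0.0, 2.9],
    [3.0, 0.8, 2.7, 2.8],
]
print(nearest_obstacle(depth))
print(is_path_clear(depth))
```

A real pipeline would also project pixels into 3D using the camera intrinsics and feed the result to a path planner; this sketch only covers the nearest-obstacle check.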

Vision-based fusion location

Vision-based fusion location uses vision as the primary source of information and combines it with other types of sensors, such as IMU, GPS, and lidar, to infer the robot's position. Fusion approaches are divided into loose coupling and tight coupling. We mainly focus on tight coupling.
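To make the distinction concrete: in loose coupling, each sensor pipeline first produces its own position estimate and the estimates are combined afterwards, whereas in tight coupling the raw measurements (for example image features and IMU readings) enter a single joint estimator. The sketch below shows only the simpler, loosely coupled case, using inverse-variance weighting of independent 1D position estimates. The function name `fuse_estimates` and the numbers are hypothetical, and this is not the group's estimator.

```python
def fuse_estimates(estimates):
    """Fuse independent 1D position estimates by inverse-variance weighting.

    estimates: list of (position, variance) pairs, one per sensor pipeline.
    Returns (fused_position, fused_variance).
    """
    if not estimates:
        raise ValueError("need at least one estimate")
    # A more certain estimate (smaller variance) gets a larger weight.
    weights = [1.0 / var for _, var in estimates]
    total = sum(weights)
    fused_pos = sum(w * pos for w, (pos, _) in zip(weights, estimates)) / total
    return fused_pos, 1.0 / total


# Hypothetical values: a vision estimate (variance 1.0) and a GPS estimate
# (variance 3.0) of the same coordinate, in meters.
vision = (10.0, 1.0)
gps = (14.0, 3.0)
fused_pos, fused_var = fuse_estimates([vision, gps])
print(fused_pos, fused_var)
```

The fused variance is smaller than either input variance, which is the basic benefit of fusion. A tightly coupled system avoids the intermediate per-sensor estimates, at the cost of a larger and more complex estimator.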

Project

Kiwi Drone

This project aims to create a smart, safe MAV (micro aerial vehicle) that can operate in a swarm. Our MAV platform carries at least seven sensors and a high-performance computing board such as an Odroid XU4 or a NUC. The platform is mainly used to verify our navigation, location, and path-planning algorithms in several kinds of environments.

Autonomous Ground Robot

This project aims to develop a robust autonomous ground robot system that can be applied to a wide variety of scenes. It will be able to carry out given tasks and autonomously explore new environments without prior information. During exploration, the robot can reconstruct the scene as a sparse 3D map and/or a semantic object-level map, and recover its own trajectory in real time.

Paper list

1. Wang, R., Zou, D., Pei, L., Liu, P., & Xu, C. (2016). Velocity Prediction for Multi-rotor UAVs Based on Machine Learning. In China Satellite Navigation Conference (CSNC) 2016 Proceedings: Volume II (pp. 487-500). Springer, Singapore.

2. Wang, R., Zou, D., Pei, L., Liu, P., & Xu, C. (2017). An Aerodynamic Model-Aided State Estimator for Multi-Rotor UAVs. In 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). IEEE.

3. Gong, Z., Pei, L., Zou, D., et al. (2016). Graphical Approach for MAV Sensors Fusion. In International Technical Meeting of the Satellite Division of the Institute of Navigation. Institute of Navigation.